Edge Conference 5: London

On Saturday, I attended the 5th installment of the fantastic Edge Conference at the Facebook London offices in Euston. We had in-depth discussions about complex problems and set about creating solutions and providing feedback to browser vendors to keep the web moving forwards. This is my third appearance at Edge Conference; my first was as a panelist at Edge 2 in NYC, and I’m fortunate enough to say that LiquidFrameworks has been very supportive, enabling me to continue to attend.

Edge has no conventional talks – the focus of the conference is on productive discussion and debate, rather than presenting the experiences of a single presenter for the audience to consume. Two types of sessions are run: highly structured panel debates with pre-curated questions and intimate breakout sessions where small groups work through the finer details of a topic in depth. Every person present is given the opportunity to present an opinion, and to ask or answer questions raised during the event. To enable such a rich environment for discussion, several tools are used to surface the most relevant opinions in real time: Slack, Google Moderator, Twitter and, most fun of all, throwable microphones!

The conference ran sessions on Security, Front End Data, Components and Modules, and Progressive Enhancement. The breakout sessions covered related themes: ServiceWorkers, ES6 Patterns and Installable Web Applications.

Security

Yan Zhu’s opening panel introduction revisited this critical topic for the web. She demonstrated how even simple functionality like emoji support can surface XSS vulnerabilities and enumerated several techniques developers can use to promote private and secure communications on the web. The panel discussed opportunities for promoting HTTPS usage and the blockers to HTTPS adoption: third-party content (such as ads) forcing mixed content, CDNs and, of course, the tedious process of setting up SSL.

Front End Data

I was blown away by Nolan Lawson’s introduction to the topic of front end data. Storage in the browser is gaining a lot of traction and the number of complex use cases for front end data is steadily increasing as the lines between native applications and web applications become more blurred. The number of storage options in the browser is quite overwhelming: ServiceWorker, ApplicationCache, IndexedDB, WebSql, File System API, FileReader, LocalStorage, SessionStorage, Cookie…and even notifications (as mentioned by Jake Archibald – notifications contain data fragments). I was particularly impressed by the npm in your browser demo, which can store over a gigabyte of data for offline browsing of npm. My biggest concern from this session was that even today there is no way for a developer to provision data storage for an application that is safe from browser eviction when the system is under space constraints. Hopefully this is something that is rectified as the Quota Management API evolves.

Nolan’s slides from this session can be found here.

Components and Modules

The components and modules session took a dual focus: web components and React components. Even with this dual focus, many common themes were discussed, such as performance, optimization, bundling and portability. Former best practices in this space (bundling components together for optimized delivery) were questioned; with the adoption of HTTP/2, unbundled modules provide granular cache invalidation without the overhead of managing multiple connections that HTTP/1.x imposes. Through intelligent servers, resources can be pushed into browsers based on previous usage patterns, optimizing for resources that are commonly accessed together. It was discussed that tooling in this space should allow for migration to HTTP/2 without the additional overhead of maintaining two ways to deliver the application. This conversation led well into the next topic: progressive enhancement.

Progressive Enhancement

Progressive Enhancement, it seems, is a topic always introduced with the question of “who would turn off JavaScript” and Edge Conference was no exception. Fortunately, the discussion led much deeper into this nuanced topic. The panel discussed the complexities of supporting ES6 and ES7 syntax changes, without breaking browsers limited to ES5 (and below). It was interesting to see that the “baseline” of support (below which everything breaks) is a very loose concept and is directly linked to return on investment.

Breakout Sessions

One of the things I love about Edge is the breakout sessions. These sessions provide an opportunity to explore many of the concepts raised in the panel discussions in more depth. With access to such a wealth of knowledge in the room, breakout sessions are a fantastic opportunity to seek insight into complex problems and work with vendors and standards bodies to smooth out rough edges. I attended sessions on installing web apps, components and front end data. I’d love to see these sessions recorded in the future as there were parallel sessions I’d have loved to go to but missed out on; however, the format of these sessions makes that quite difficult.

Wrap up!

As always, Edge Conference was a blast this year. If you missed out this time, all the videos of the conference will be available on the website, professionally captioned and with content search.

All in all, I really enjoyed attending Edge Conference and am looking forward to future installments!

Binding multiple event handlers to JqGrid

[UPDATE: As of version 4.3.2 (April 2012) JqGrid now uses jQuery events as described below]

If you’ve ever used JqGrid for anything more than its simple, out-of-the-box defaults, chances are you’ve come across problems related to how JqGrid handles events. As it stands (version 4.3.0 at the time of writing), the JqGrid API allows for one and only one event handler per user event. In particular, this approach can be a significant hurdle to constructing plugins to interpret user interactions with the grid. This post demonstrates an alternative approach using jQuery events, allowing for multiple handlers to be bound to the grid.

A full jQuery event plugin for JqGrid using this approach can be found on my github page: https://github.com/CraigCav/jqGrid.events

The JqGrid Event API

To handle events, JqGrid provides an API for specifying a callback to be executed when a named event occurs:

jQuery("#grid").jqGrid({

...

onSelectRow: function(id){

//do something interesting

},

...

});

This callback can be provided as an option to the grid widget on initialization (as shown) or it can be specified after the grid has been initialized using the setGridParam method. Additionally, it can be set globally by extending the grid defaults:

jQuery.extend(jQuery.jgrid.defaults, {

...

onSelectRow: function (id, selected) {

//do something interesting

},

...

});

Unfortunately, this API limits consumers to a single callback for each event. As a developer consuming this API, I may wish to provide default settings for handling an event (grid load, for example) and also provide instance-specific options for handling the same event. The API is too restrictive to allow both.
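To make the restriction concrete, here is a minimal sketch that uses plain objects as stand-ins for the grid defaults and the per-grid options. The names gridDefaults and instanceOptions are hypothetical, and the merge is only assumed to behave like a typical last-one-wins options merge; it is not JqGrid's own code.

```javascript
// Plain-object stand-ins for the global defaults and one grid's options.
// Both names are hypothetical and exist only for this illustration.
var gridDefaults = {
  onSelectRow: function (id) { return 'default handler saw row ' + id; }
};

var instanceOptions = {
  onSelectRow: function (id) { return 'instance handler saw row ' + id; }
};

// A last-one-wins merge: the instance callback replaces the default one,
// so the default handler is never called for this grid.
var merged = Object.assign({}, gridDefaults, instanceOptions);

var result = merged.onSelectRow(7);
```

Only one of the two functions survives the merge, which is exactly the problem when both a default and an instance-level behaviour are wanted.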

Let’s explore an alternative approach to handling user interactions.

jQuery Events

jQuery provides a standard suite of functionality specifically for registering behaviors that take effect when a user interacts with the browser. Of particular interest is the bind method; it allows for an event handler to be attached directly to an element.

$(element).bind('click', function(){

//handle click event

});

A key point to note about the “bind” method is that multiple handlers can be bound to an element. Each handler will be called when the event is triggered for that element.

Applying this mechanism could provide a means to achieve what we need (multiple handlers) that we currently cannot easily achieve using the JqGrid API alone. Unfortunately however, JqGrid does not currently execute any handlers attached in this manner, so our work isn’t over yet.

Triggering Events

We can use jQuery trigger or triggerHandler to work alongside our bind calls to ensure our events get triggered. Perhaps in some later release these methods will be invoked within JqGrid itself (I might submit a patch if I get around to it). Until then, we can wire up the triggers for each interesting event by setting the JqGrid options globally:

jQuery.extend(jQuery.jgrid.defaults, {

...

onSelectAll: function (ids, selected) {

$(this).triggerHandler("selectAll.jqGrid", [ids, selected]);

},

onSelectRow: function (id, selected) {

$(this).triggerHandler("selectRow.jqGrid", [id, selected]);

},

...

etc

...

});

Each of the available JqGrid event callbacks is now used to trigger the appropriate event handlers. Instead of providing a single extension point for handling events, we can now register as many handlers for each event as we like using bind:

$(element).bind('selectRow.jqGrid', function(event, id, selected){

//do something interesting

});

$(element).bind('selectRow.jqGrid', function(event, id, selected){

//and something awesome

});
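For anyone who wants to see the whole flow without a browser, the following is a rough, self-contained sketch of the same idea. The createEventHub function is a hypothetical, heavily simplified stand-in for jQuery's bind and triggerHandler on a single element (it is not how jQuery or JqGrid are implemented); it only shows how one callback can fan out to several handlers.

```javascript
// Hypothetical, simplified stand-in for bind/triggerHandler on one element.
function createEventHub() {
  var handlers = {};
  return {
    // Register another handler for the named event.
    bind: function (name, handler) {
      (handlers[name] = handlers[name] || []).push(handler);
    },
    // Call every handler registered for the named event, in order,
    // passing an event object followed by the supplied arguments.
    triggerHandler: function (name, args) {
      var event = { type: name };
      (handlers[name] || []).forEach(function (handler) {
        handler.apply(null, [event].concat(args || []));
      });
    }
  };
}

var grid = createEventHub();
var log = [];

// The single grid-style callback does nothing except forward to the hub.
var gridOptions = {
  onSelectRow: function (id, selected) {
    grid.triggerHandler('selectRow.jqGrid', [id, selected]);
  }
};

grid.bind('selectRow.jqGrid', function (event, id, selected) {
  log.push('interesting: ' + id);
});

grid.bind('selectRow.jqGrid', function (event, id, selected) {
  log.push('awesome: ' + id);
});

// Simulate the grid invoking its one and only callback.
gridOptions.onSelectRow(42, true);
```

After the simulated row selection, both handlers have run, even though the grid itself only knows about a single onSelectRow callback.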

The full source of my jQuery event plugin for JqGrid can be found on my github page here: https://github.com/CraigCav/jqGrid.events

More refactorings from the trenches

A little earlier this week I came across these JavaScript functions while visiting some code in the application I’m working on:

function view_map(location) {

window.open('http://www.google.co.uk/maps?q=' + location.value);

return false;

}

function view_directions(form) {

var fromLocation = jQuery(form).find(".from_point").get(0);

var toLocation = jQuery(form).find(".to_point").get(0);

window.open('http://www.google.co.uk/maps?q=from:' + fromLocation.value + ' to:' + toLocation.value);

return false;

}

These methods are used to open links to Google Maps* pages.

Take note – to consume either of the JavaScript functions, you have to provide specific DOM elements and the form needs to have other fields stashed away in the mark-up.

So let’s see how these were being consumed:

<a href="#" title="Click to show map" onclick="view_map(jQuery(this).closest('.journey').find('.to_point').get(0));" >To</a>

<%--and much further down in the markup--%>

<input name="FromLatLng" class="hidden from_point" type="text" value="<%= Model.FromLatLng %>"/>

<input name="ToLatLng" class="hidden to_point" type="text" value="<%= Model.ToLatLng %>"/>

Yuck. There’s not a lot going for this code.

Particularly nasty is that the code traverses the DOM looking for particular fields. It then builds up a query string based on the field values and, once clicked, opens the link in a new window.

Let’s try again.

In this example, the values eventually passed to the JS function to construct the URI are actually known upfront. So instead, let’s build a small helper extension to construct our URI from a given location. We’ll hang this helper off the UrlHelper for convenience:

public static string GoogleMap(this UrlHelper urlHelper, string location)

{

var uri = new UriBuilder("http://www.google.co.uk/maps")

{

Query = string.Format("q={0}", HttpUtility.UrlEncode(location))

};

return uri.ToString();

}

Then, let’s consume it:

<a href="<%= Url.GoogleMap(Model.ToLatLng) %>" target="_blank" title="Click to show map">To</a>

This is much less brittle as we no longer traverse the DOM for no reason. We can also remove the unnecessary JavaScript functions, instead using the target attribute of the anchor to provide the same functionality.
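If a link like this ever does have to be built on the client (say, from a value that is only known in the browser), the same idea carries over: take the value as a plain argument and encode it, rather than digging it out of the DOM. The googleMapUrl function below is a hypothetical helper written for this sketch, and the coordinates are made up.

```javascript
// Hypothetical client-side counterpart of the server-side helper.
// It takes the location as a plain string and percent-encodes it,
// so characters such as commas and spaces cannot break the query string.
function googleMapUrl(location) {
  return 'http://www.google.co.uk/maps?q=' + encodeURIComponent(location);
}

// Made-up coordinates, purely for illustration.
var mapUrl = googleMapUrl('51.5285,-0.1337');
```

Note that the original JavaScript functions concatenated the raw field value into the URL without any encoding at all.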

*Google provides ways to embed maps inside your application, and you should use them – the approach shown above directs users away from your site.

A refactoring from the trenches

A little earlier this week I came across this JavaScript function while visiting some code in the application I’m working on:

function filter_form(form) {

var form = jQuery(form);

var action = form[0].action;

//get the serialized form data

var serializedform = form.serialize();

//redirect

location.href = action + "?" + serializedform;

}

The function takes a form, serializes it, adds its values to the URL, and redirects to it. I found the need to do this a little odd, so I had a look at the calling code:

<form action="<%= Url.Action<SomeController>(x => x.FilterAction()) %>"

method="post"

class="form-filter">

<%--some input fields here--%>

<a href="#" onclick="var form = jQuery(this).closest('.form-filter'); filter_form(form); return false;"

title="Filter this"

class="ui-button ui-state-default"

id="filter-form"

rel="filter">Go</a>

</form>

The exhibited behaviour is that clicking the link (styled as a button) will cause a redirect to the target page with the addition of some query parameters.

Although we can see the developer’s intent (providing filters as part of the query string), the implementation leaves a lot to be desired.

Let’s see if we can do better.

We have a couple of clues here already; the form represents “search filters”, so submitting it is safe (i.e., causes no side effects) and idempotent.

Instead of a JavaScript driven redirect, why not submit the form using HTTP get:

get: With the HTTP "get" method, the form data set is appended to the URI specified by the action attribute (with a question-mark ("?") as separator) and this new URI is sent to the processing agent.

<form action="<%= Url.Action<SomeController>(x => x.FilterAction()) %>" method="get">

<%--some input fields here--%>

<button title="Filter this" type="submit" value="Go">Go</button>

</form>

This way we get the same behaviour, minus the unnecessary JavaScript.
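For the curious, this is roughly what the browser does for us on a "get" submission, sketched with the standard URLSearchParams class. The action path and the field names and values are invented for the example; the real ones would come from the form.

```javascript
// Invented action and field values standing in for the real form.
var action = '/orders/filter';
var fields = { status: 'open', customer: 'Smith & Sons' };

// URLSearchParams serializes the pairs as form-urlencoded data:
// spaces become "+", and "&" inside a value becomes "%26".
var query = new URLSearchParams(fields).toString();

// The form data set is appended to the action with "?" as the separator.
var target = action + '?' + query;
```

Here target ends up as /orders/filter?status=open&customer=Smith+%26+Sons, which is the kind of URL the hand-written filter_form function was trying to assemble.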

Processing ModelState errors returned in Json format using Knockout.js

As part of my quest to become a better JavaScript developer I’ve been experimenting with a really neat little JavaScript library called Knockout. For those unfamiliar with Knockout, here’s a very quick overview:

Knockout is a JavaScript library that helps you to create rich, responsive display and editor user interfaces with a clean underlying data model

The rest of this post will pretty much be a brain dump of some of my recent experiments with the Knockout JavaScript library. We’ll take a little look at some typical procedural style usage of JavaScript, and then show how this can be cleaned up using a more declarative style using Knockout.

Returning Model State as Json

To set the scene, I have a small form in the application I’m working on where some complex server-side validation takes place. When this validation takes place, any broken validation rules are transformed into Json and returned to the client.

The implementation that processed the subsequent json result (based on this post) looked a little like this:

Source Code: View

<div id="operationMessage"><ul></ul></div>

Source Code: Scripts

<script type="text/javascript">

function ProcessResult(result) {

$("#operationMessage > ul").empty();

if (!result.Errors) return true;

$('#operationMessage').addClass('error');

for (var err in result.Errors) {

var errorMessage = result.Errors[err];

var message = errorMessage;

$('#operationMessage > ul').append('<li> ' + message + '</li>');

}

return false;

}

</script>

The function ProcessResult is called subsequent to receiving the response from a server side call using $.ajax(). The result object contains our list of model state errors in json format.

As you can see, the code takes responsibility for:

- Ensuring the UL element of #operationMessage is empty (to ensure only the messages from the current result are displayed)

- Adding a css class “error” if there are error messages to display

- Pulling out each part of the message, and appending it to the DOM

There are a couple of things worth noting here:

- The function needs to know about the mark-up of the page, and the mark-up to use to display messages

- The mark-up in the page needs “hooks” in the form of the id, such that the function can locate where to add messages

In essence, the “what” and the “how” of displaying error messages are both contained in one function; if either of them changes, we risk impacting both.

Let’s see if we can do better.

View Models

The first step in separating out the concerns of our function is to define the “what” part – what are we trying to display? Let’s make that explicit using a view model. Actually, this is pretty simple for this example; we want to display a list of messages. In traditional JS, this is just an array – but since we’ll be hooking into a little Knockout goodness, we’ll use an observableArray:

var viewModel = {

errors: ko.observableArray([])

};

The observable part is a knockout feature that lets the UI observe and respond to changes. To populate this model, we’ll simply call viewModel.errors(result.Errors) in place of calling ProcessResult.
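To give a feel for what “observable” means, here is a deliberately tiny stand-in written from scratch. It is not Knockout's implementation, and the subscriber below only mimics what a UI binding might do, but it follows the same calling convention: call with no arguments to read, call with a value to write and notify.

```javascript
// Simplified stand-in for an observable array (NOT Knockout's own code).
function observableArray(initial) {
  var value = initial || [];
  var subscribers = [];

  function observable(newValue) {
    if (arguments.length === 0) {
      return value;                 // called with no arguments: read
    }
    value = newValue;               // called with a value: write...
    subscribers.forEach(function (notify) {
      notify(value);                // ...and tell everyone who is watching
    });
  }

  observable.subscribe = function (callback) {
    subscribers.push(callback);
  };

  return observable;
}

var viewModel = { errors: observableArray([]) };

// A pretend "UI" that re-renders whenever the errors change.
var rendered = [];
viewModel.errors.subscribe(function (errors) {
  rendered = errors.map(function (error) {
    return '<li>' + error + '</li>';
  });
});

// Made-up messages standing in for result.Errors.
viewModel.errors(['Name is required', 'Date is invalid']);
```

The code that sets the errors never touches the mark-up; it only changes the model, and whoever is observing decides how to react.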

JQuery Templates

Now we’ve defined our model, we’ll bind to this model using a jQuery template. This will form the View, or the “how” part – defining how we want to display our model. First, let’s define an element for our template:

<div data-bind='template: "validationSummaryTemplate", css: { error: errors().length > 0 }'></div>

And then the template itself:

<script type="text/html" id="validationSummaryTemplate">

<ul>

{{each(i, error) errors}}

<li>

${error}

</li>

{{/each}}

</ul>

</script>

Pretty concise – we’re simply defining the structure of our page in terms of our view model i.e. for each error in the view model, we’ll render a LI tag containing the error message.

Worth noting is the css binding – remember that we want to add the error class if there are messages to display – that’s how we do it. We could push this logic onto a property of our view model if we like (“hasErrors”, say), but since this isn’t complex or re-used elsewhere in the view, let’s keep it here for now.

To apply the binding, we’ll need one final thing. We need to tell knockout to take effect:

ko.applyBindings(viewModel);

Quick Roundup

What have we gained? The “what” and the “how” are now cleanly separated, and the procedural “processing” JavaScript is completely removed. We also no longer need to dig into a JavaScript method if we wish to change the mark-up for our error items (say, to render a table instead of a list).

When writing JavaScript (much like any other language), it’s all too easy to end up with large swathes of procedural code if we aren’t careful in keeping responsibilities focused and separate. The primary problem that faces us with procedural code comes when we try to scale out complexity.

I’ve found Knockout to be a really good enabler for applying patterns such as MVVM, which in turn helps us to keep complexity at bay.

The death of mocks?

There’s been a lot of healthy discussion happening on the interwebs recently (actually, a couple of weeks ago – it’s taken far too long for me to finish this post!) regarding the transition away from using mocks within unit tests. The principal cause for this transition, and the primary concern, is that when mocking dependencies to supply indirect inputs to our subject under test, or to observe its indirect outputs, we may inadvertently leak the implementation detail of our subject under test into our unit tests. Leaking implementation details is a bad thing, as it not only detracts from the interesting behaviour under test, but it also raises the cost of design changes.

Where is the leak?

Jimmy Bogard highlighted the aforementioned issues with his example test for an OrderProcessor:

[Test]

public void Should_send_an_email_when_the_order_spec_matches()

{

// Arrange

var client = MockRepository.GenerateMock<ISmtpClient>();

var spec = MockRepository.GenerateMock<IOrderSpec>();

var order = new Order {Status = OrderStatus.New, Total = 500m};

spec.Stub(x => x.IsMatch(order)).Return(true);

var orderProcessor = new OrderProcessor(client, spec);

// Act

orderProcessor.PlaceOrder(order);

// Assert

client.AssertWasCalled(x => x.Send(null), opt => opt.IgnoreArguments());

order.Status.ShouldEqual(OrderStatus.Submitted);

}

The example test exercises an OrderProcessor and asserts that when a large order is placed, a sales person is notified of the large order. In this example, the OrderProcessor takes dependencies on implementations of IOrderSpec and ISmtpClient. Mocks for these interfaces are set up in such a way that they provide indirect inputs to the subject under test (canned responses), and verify indirect outputs (asserting the methods were invoked).

Since the behaviour (notification of large orders) can be satisfied by means other than consuming the IOrderSpec and ISmtpClient dependencies, coupling our unit test to these details creates additional friction when altering the design of the order processor. The bottom line is that refactoring our subject under test shouldn’t break our tests because of implementation details that are unimportant to the behaviour under test.

One step forward – test focus

To avoid leaking implementation detail within our unit tests, tests should be focused towards one thing; verifying the observable behaviour. Context-Specification style testing, BDD, and driving tests top-down can be applied to focus our tests on the interesting system behaviour. Taking this approach into account for the OrderProcessor example, the core behaviour may be defined as “Notifying sales when large orders are placed”. Reflecting this in the name of the test may give us:

public void Should_notify_sales_when_a_large_order_is_placed()

This technique provides a test that is more focused towards the desired behaviour of the system; however, the test name is just the first step – this focus must also be applied to the implementation of the test such that it isn’t coupled to less interesting implementation details.

So how do we execute the subject under test while keeping the test free of this extraneous detail?

Two steps back? Containers, Object Mother, Fixtures and Builders

Subsequent to his previously mentioned post, Jimmy alludes to using an IOC container to provide “real implementations” of components (where these components are within the same level of abstraction and do not cross a process boundary or seam). Jimmy also mentions using patterns such as an object mother, fixtures or builders, along with “real implementations”, to simplify the creation of indirect inputs.

At this point, my code-spidey-sense started tingling, and I know I’m not the only person who had this reaction:

My concern here is that we’re pinning blame on mocks for stifling the agility of our design, but I believe there are other factors in play. Replacing 20 lines of mocks for an uninteresting concern with 20 lines of data setup for an uninteresting concern is obviously not the way forward, as it doesn’t address the problem.

For example, substituting a “real” order specification for a larger order in place of the mock specification does not overcome the fact that we may want to readdress the design such that we no longer require a specification at all.

I do agree however, with the premise that using components from the same level of abstraction (especially stable constructs such as value objects) is a valid approach for unit testing, and does not go against the definition of a unit test.

A different direction

Experience has taught me to treat verbose test setup as a smell that my subject under test has too many responsibilities. In these scenarios, breaking down the steps of the SUT into cohesive pieces often introduces better abstractions, and a cleaner design. Let’s take another look at the OrderProcessor example, and review its concerns.

We defined the core behaviour as “Notifying sales when large orders are placed”. Our concerns (as reflected by the current dependencies) are identifying large orders and notification. Interestingly enough, these concerns have two very different reasons for change: the definition of a large order is very domain-centred and may change for reasons like customer status or rates of inflation, whereas notification is flatly a reporting concern and may change alongside our UI requirements. It appears then, that our concerns may exist at different levels of abstraction.

Let’s assume then that the OrderProcessor is a domain concept and is the aggregate root for the sales process. We’ll remove the notification concern from the equation for now, and handle that outside of the domain layer.

If we treated the identification of a large order as an interesting domain event (using an approach like the one discussed by Udi Dahan here or as discussed by Greg Young here), we may end up with a test like this:

[Test]

public void Should_identify_when_a_large_order_is_submitted()

{

// Arrange

var order = new Order {Total = 500m};

var orderProcessor = new OrderProcessor();

// Act

orderProcessor.PlaceOrder(order);

// Assert

orderProcessor.UncommitedEvents

.First()

.ShouldBe<LargeOrderSubmitted>();

}

Interestingly enough, as a side effect of this design, there is no mocking necessary at this level, and each component we interact with exists within the same level of abstraction (the domain layer). Since there is no additional logic in the order processor other than identifying large orders, we can remove the concept of an OrderSpecification completely.
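As a rough illustration of what sits behind a test like that, here is a small sketch of an order processor that records a domain event instead of calling out to collaborators. It is written in JavaScript rather than the C# of the test above purely to keep it short, and the threshold value, property names and event shape are all assumptions made for the example.

```javascript
// Assumed threshold; the discussion never says what counts as "large".
var LARGE_ORDER_THRESHOLD = 500;

function OrderProcessor() {
  // Events raised by this aggregate that have not yet been published.
  this.uncommittedEvents = [];
}

OrderProcessor.prototype.placeOrder = function (order) {
  order.status = 'Submitted';
  // The "is this a large order?" rule lives here in the domain,
  // so no separate specification object is required.
  if (order.total >= LARGE_ORDER_THRESHOLD) {
    this.uncommittedEvents.push({
      type: 'LargeOrderSubmitted',
      orderNumber: order.number
    });
  }
};

var largeOrderProcessor = new OrderProcessor();
largeOrderProcessor.placeOrder({ number: 123, total: 500 });

var smallOrderProcessor = new OrderProcessor();
smallOrderProcessor.placeOrder({ number: 124, total: 20 });
```

The test only has to look at the recorded events; nothing about how sales eventually get notified leaks into it.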

To maintain the same behaviour in our system, we must ensure Sales are still notified of large orders. Since we have identified this to be a reporting concern, we perform this task outside of the domain layer, in response to the committed domain events:

public void Should_email_sales_when_large_order_is_submitted()

{

// Arrange

var client = MockRepository.GenerateMock<ISmtpClient>();

var salesNotifier = new SalesNotifier(client);

// Act

salesNotifier.Handle(new LargeOrderSubmitted {OrderNumber = 123});

// Assert

client.AssertWasCalled(x => x.Send(null), opt => opt.IgnoreArguments());

}

Since we are crossing a process boundary by sending an email, it’s still beneficial to use a mock here (we don’t really want to spam sales with fake orders). Although the implementation of the SalesNotifier test is concerned with the implementation detail of consuming an ISmtpClient implementation, the cost of this decision is lower since our SalesNotifier performs a single responsibility. This cost is offset by the benefit that we do not cross a process boundary in our test implementation; arguably a price worth paying.
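The same shape can be sketched without a mocking library at all, using a hand-rolled fake at the process boundary. Again this is JavaScript rather than C#, and createFakeSmtpClient, the email address and the message format are all hypothetical.

```javascript
// Hand-rolled fake standing in for an SMTP client.
// It records messages instead of sending anything.
function createFakeSmtpClient() {
  var sent = [];
  return {
    send: function (message) { sent.push(message); },
    sent: sent
  };
}

function SalesNotifier(client) {
  this.client = client;
}

// Single responsibility: turn a LargeOrderSubmitted event into an email.
SalesNotifier.prototype.handle = function (event) {
  this.client.send({
    to: 'sales@example.com', // hypothetical address
    subject: 'Large order ' + event.orderNumber + ' submitted'
  });
};

var fakeClient = createFakeSmtpClient();
var salesNotifier = new SalesNotifier(fakeClient);

salesNotifier.handle({ type: 'LargeOrderSubmitted', orderNumber: 123 });
```

Because the notifier does one thing, the only detail the test is coupled to is the single call across the boundary.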

One last example

Interestingly enough, Jimmy Bogard has an excellent example of separating concerns across levels of abstraction. By identifying a common pattern in his Asp.Net MVC controller implementation, and separating the responsibilities of WHAT they were doing from HOW they were doing it, his resultant controller design is much simpler. Notice as well how tests for these controller actions no longer require dependencies to be mocked, as they are working within a single level of abstraction; directing traffic within an MVC pipeline…

Conclusion

Tests are great indicators of design problems with our codebase. Complex test setup often indicates missing abstractions and too many responsibilities in components of our codebase. When confronted with excessive test-setup, try to take a few steps back and identify the bigger design picture. Try to identify whether your test is exercising code at different abstraction levels. BDD style testing can help focus your tests back to the core behaviour of your system, and following SOLID principles can help alleviate testing friction. Mocks can be extremely useful tools for isolating behaviour, but aren’t always necessary, particularly when our design is loosely coupled.

Uniqueness validation in CQRS Architecture

Note: This post is copied almost verbatim from a comment I left on Jérémie Chassaing’s blog, my apologies if you’ve seen it there already!

I’ve really enjoyed reading a series of posts on CQRS written by Jérémie Chassaing. I particularly like the idea that there is no such thing as global scope:

Even when we say, “Employee should have different user names”, there is a implicit scope, the Company.

What this gives us is the ability to identify potential Aggregate Roots in a domain – in the above relationship, there is potentially a Company Aggregate Root in play.

Another observation in Jérémie’s post really got me thinking.

Instead of having a UserName property on the Employee entity, why not have a UserNames key/value collection on the Company that will give the Employee for a given user name ?

If I’ve understood Udi’s posts on CQRS, I think he’d probably advocate the collection of Usernames being part of the Query-side, rather than the Command side. I’ve heard him mention before that the query side is often used to facilitate the process of choosing a unique username – the query store may check the username as the user is filling in the "new user" form, identifying that a username already exists and suggesting alternatives.

Of course this approach isn’t bullet-proof, and it will still remain the responsibility of another component to handle the enforcing of the constraint.

The choice of WHERE to put this logic is a question that is commonly debated.

Some argue that since uniqueness of usernames is required for technical reasons (identifying a specific user) rather than for business reasons, this logic falls outside of the domain to handle.

Others may argue that this logic should fall in the domain – perhaps under a bounded context responsible for managing user accounts.

In either case, since we have a technical problem (concurrency conflicts) and we have several possible solutions, the decision of whether or not they are suitable should probably be considered in conjunction with the expected frequency of the problem occurring. This sounds to me like the kind of thing that would appear in an SLA.

The solution chosen to enforce the uniqueness constraint will then depend on the agreed SLA. Perhaps it is acceptable that a command may fail (perhaps due to the RDBMS rejecting the change) on the few cases of concurrency conflicts – it might only be in 0.0001% of cases.

Alternatively we may decide that it is unacceptable to allow this to occur due to the frequency of this occurring. We could choose to maintain the list of usernames in the Company aggregate, but scale out our system such that all "new user" requests in the username range A-D are handled by a specific server. If we decide to enforce this constraint outside of our domain, we can offload this work to occur with the command handlers.
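A rough sketch of those two ideas together might look like the following. Everything here is an assumption made for illustration: the Company shape, the case-insensitive comparison, the partition table and the server names. The point is only that the aggregate owns the user name collection, and that routing by user name range sends conflicting requests to the same place.

```javascript
// Company aggregate owning the user name -> employee lookup.
function Company() {
  this.userNames = new Map();
}

Company.prototype.registerEmployee = function (userName, employeeId) {
  var key = userName.toLowerCase(); // assumption: names are case-insensitive
  if (this.userNames.has(key)) {
    throw new Error('User name already taken: ' + userName);
  }
  this.userNames.set(key, employeeId);
};

// Hypothetical partition table: each range of first letters is owned by
// one server, so two requests for the same name always meet on one box.
var PARTITIONS = [
  { from: 'a', to: 'd', server: 'users-1' },
  { from: 'e', to: 'z', server: 'users-2' }
];

function serverFor(userName) {
  var first = userName.charAt(0).toLowerCase();
  for (var i = 0; i < PARTITIONS.length; i++) {
    if (first >= PARTITIONS[i].from && first <= PARTITIONS[i].to) {
      return PARTITIONS[i].server;
    }
  }
  return 'users-default'; // digits, symbols and so on
}

var company = new Company();
company.registerEmployee('craig', 1);

var duplicateRejected = false;
try {
  company.registerEmployee('Craig', 2);
} catch (e) {
  duplicateRejected = true;
}
```

With this in place, serverFor('craig') and serverFor('Craig') both resolve to the same server, so the duplicate check is never raced across machines.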

What do you think?

Domain Modelling and CQRS

Note: This post originated from an email discussion I had with a colleague. I’ve removed/replaced the specifics of our core domain (hopefully this hasn’t diluted the points I’m trying to make too much!)

While I consider a focus on capturing intent to be an exciting part of CQRS from a core software design perspective, I believe it is achieving the distillation part of DDD, separating our core domain from supporting domains, that allows us to maximise our potential ROI from the application of CQRS (with event sourcing).

From a business stance, we have chosen as a company to focus on a core domain to differentiate us from our competitors. Our management/marketing team have decided that this area of business provides our advantage over competitor products. From that perspective, it makes sense that we channel our efforts into ensuring that our software model is optimised for this purpose. As a result, we need to spend less effort working on our supporting domains and more effort on our core domain.

I would therefore argue that we should not apply the same level of analysis and design on our supporting domains, as we do on our core model – these areas provide little ROI by comparison.

Whilst I agree that moving away from the CRUD mentality is vital in our core domain, it is not so essential in supporting domains. The level of complexity in our supporting domains is insufficient to justify the costs of applying complex modelling techniques to these areas. Supporting domains could potentially be created using RAD tools, bought off the shelf where possible, or even outsourced. In any of these cases, it is the distillation process that allows us to identify a clean separation between sub-domains – a separation we need to maintain in our code base.

A really interesting article on this can be found here, the concepts from which originate in Eric Evans’ DDD book.

Domain Driven Design, CQRS and Event Sourcing

It’s taken quite a while, but I think I’ve had a bit of a revelation in really grokking the application of CQRS, Event Sourcing and DDD.

I’ve been considering the application of CQRS to a multi-user collaborative application (actually a suite of applications) at the company where I work. For some parts of the application, it is really easy to visualise how the application of CQRS would provide great benefits, but for others, I couldn’t quite figure out how the mechanics of such a system could be put into place while still maintaining a decent user experience.

Let me try to elaborate with a couple of examples:

In one application I work on, a user may make a request for a reservation. I can see this working well under CQRS. The command can be issued expecting to succeed, and the response needn’t be instant; a message informing the user that their request is being processed, and that they will be notified of the outcome, should suffice. The application can then take responsibility for checking the request against its business rules, and raising relevant events accordingly (reservation accepted, reservation denied etc). Supporting services could also notify users of the system when other events they might be interested in occur.
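The reservation flow described above might look something like the following sketch. It is only an illustration under stated assumptions: the command and event names mirror the wording above, but the fields, the in-process queue and the "remaining capacity" business rule are invented for the example.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Union


@dataclass(frozen=True)
class RequestReservation:
    """Command capturing the user's intent; all fields are illustrative."""
    reservation_id: str
    user_id: str
    seats: int


@dataclass(frozen=True)
class ReservationAccepted:
    reservation_id: str


@dataclass(frozen=True)
class ReservationDenied:
    reservation_id: str
    reason: str


ReservationEvent = Union[ReservationAccepted, ReservationDenied]


def submit(queue: Deque[RequestReservation], command: RequestReservation) -> str:
    """Queue the command and acknowledge immediately; no outcome is known yet."""
    queue.append(command)
    return "Your request is being processed; you will be notified of the outcome."


class ReservationHandler:
    """Checks each command against a made-up business rule: remaining capacity."""

    def __init__(self, capacity: int) -> None:
        self.remaining = capacity

    def handle(self, command: RequestReservation) -> ReservationEvent:
        if command.seats <= 0:
            return ReservationDenied(command.reservation_id, "invalid seat count")
        if command.seats > self.remaining:
            return ReservationDenied(command.reservation_id, "not enough capacity")
        self.remaining -= command.seats
        return ReservationAccepted(command.reservation_id)


def process_pending(
    queue: Deque[RequestReservation], handler: ReservationHandler
) -> List[ReservationEvent]:
    """Drain the queue later and raise one event per command."""
    events: List[ReservationEvent] = []
    while queue:
        events.append(handler.handle(queue.popleft()))
    return events
```

The point of the split is that `submit` returns straight away, while the events produced by `process_pending` are what a notification service would subscribe to.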

For another scenario in the same application, a user may wish to update their address details. The application must store this information; however, the application does not use this information in any way, shape or form. It is there for other users to reference. When applying CQRS to this area, we start to see some oddities. A user receiving a notification that their request to update their address is being processed seems ridiculous; there is no processing required here. In addition to this, this canonical example of “capturing intent” doesn’t really apply to our domain; in our domain no one cares why the user is updating their address, be it because of a typo, or because of a change of address. This information isn’t interesting to any of the users of the system.

Then it hit me.

CRUD actions, like modifying the contact address of an employee and other ancillary tasks, provide only supporting value in our domain. For all intents and purposes, the contact address of an employee is just reference data; it is there to support our actual domain. Arguably then, there is no benefit in modelling this interaction within our domain model. It’s quite the contrary in fact; diluting our core domain model with uninteresting concerns blurs the focus from what’s important. Paraphrasing Eric Evans’ blue book: anything extraneous makes the Core Domain harder to discern and understand.

Taking this idea further, there can be significant benefit in separating this kind of functionality from actions that belong to our core domain. In code terms, this means that our domain model will not have an “Employee” entity with a collection of type “ContactAddress”. This association isn’t interesting in our core domain. It is likely that it is part of a supporting model which could be implemented quickly and effectively using any one of Microsoft’s (or any other manufacturer’s) RAD tools.
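A minimal sketch of what that separation could look like in code follows. The `Employee` and `ContactAddress` names come from the paragraph above; everything else (the fields, the `ContactDirectory` class, the in-memory dictionary) is hypothetical and stands in for whatever RAD tool or off-the-shelf component would really be used.

```python
from dataclasses import dataclass
from typing import Dict, Optional


# --- Core domain model: knows nothing about contact addresses. ---
@dataclass
class Employee:
    employee_id: str  # identity is the only thing shared with the supporting model


# --- Supporting model: plain CRUD over reference data, packaged separately. ---
@dataclass(frozen=True)
class ContactAddress:
    street: str
    city: str
    postcode: str


class ContactDirectory:
    """Stores addresses keyed by employee id; no intent, no events, just data."""

    def __init__(self) -> None:
        self._addresses: Dict[str, ContactAddress] = {}

    def save(self, employee_id: str, address: ContactAddress) -> None:
        # Overwrites silently: nobody cares whether it was a typo or a house move.
        self._addresses[employee_id] = address

    def find(self, employee_id: str) -> Optional[ContactAddress]:
        return self._addresses.get(employee_id)
```

The two halves share only the employee id, so the supporting side can be replaced or bought in without touching the core model.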

In the big blue DDD book, I think Evans describes this separation as a generic sub-domain. In generic/supporting sub-domains there may be little or no business value in applying complex modelling techniques even though the function they provide is necessary to support our core domain. Alternatively, the core domain of one application may become a supporting domain of another. In either case, the models should be developed and packaged separately.

Our product, in its various forms, contains enough complexity in its problem domain itself, without complicating things further by tangling up the core domain with supporting concerns. I do not wish to be in the situation (again) where one application needs to know the ins and outs of what is supposed to be another discrete application. If understanding the strengths and limitations of modelling techniques such as CQRS, Event Sourcing and DDD can help me achieve this, then I’m making small steps in the right direction!

NB: this post originated from an email discussion I had with a colleague. I’ve removed/replaced the specifics of our core domain (hopefully this hasn’t diluted the points I’m trying to make too much!)

Becoming a better JavaScript developer

So in my quest to become better with JavaScript, I’ve been reading a variety of books, articles, and blogs and I happened across the following site:

The blog itself has a lot of good advice to offer with regard to both structuring your JavaScript into testable and reusable modules, as well as advice on how to apply BDD techniques in testing JavaScript. What really struck me, however, were the nice little touches on the website itself – a nice little welcome message that contains the usual “about” information, that only appears when you view the site for the first time; a tweet update side bar integrating with tweetboard; and a live chat window:

The next cool thing I found on the same site can be seen here:

http://elijahmanor.com/webdevdotnet/post/Switching-to-the-Strategy-Pattern-in-JavaScript.aspx

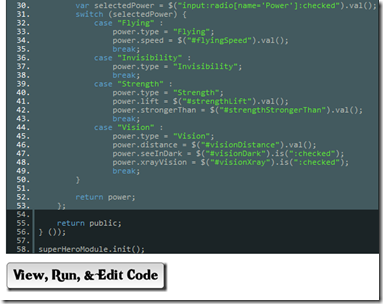

Basically, the author identifies a “code-smell” and applies a pattern to aid maintainability. The cool thing here is the link to “view, run, & edit code” for each example:

…which integrates with a site, http://jsfiddle.net, allowing the sample to be modified and run within the browser:

Very cool stuff indeed.